October 2019 Update: Google Lens rolls out new “style ideas.” When users point the lens at clothing, fashion items, and furniture, the app gives style inspiration for similar items.

AI is all around us. According to a 2018 Gallup report, 85% of Americans use at least one of six devices, programs, or services that feature AI elements. Many of us already use voice search in our day-to-day lives, depending on Alexa or Siri to answer pressing questions like “what’s the weather?” or “how tall is the Eiffel Tower?”

Google Lens is one of the newest additions to the world of AI services. Introduced in 2017 at Google I/O, Google Lens is an image recognition mobile app that can tell you about an object, landmark, or product by scanning it through a lens.

Who can use Google Lens? How well does it work? And how can marketers optimize their efforts for visual search?

In this guide, we’ll answer your Google Lens questions and let you know what it means for your marketing strategy.

How do you activate Google Lens?

For Pixel users, Google Lens is already active in the camera.

Some Android users can access Google Lens through Google Assistant. If your Android device doesn’t support Google Lens through Google Assistant, you can access it through Google Photos instead. There is also a standalone Google Lens app that works with some Android devices.

For iOS users, Google Lens can be accessed through Google Photos or the Google app.

How do you use Google Lens?

For Pixel users, Google Lens is available right in the camera. Just point the camera at the object you want to find out more about, and tap the Google Lens icon at the bottom of the screen.

Until recently, Google Lens was available to non-Pixel users only through the Google Photos app. Users had to take a photo and then open the Google Photos app to use the Google Lens feature.

However, in December 2018, Aparna Chennapragada, the VP of Google Lens & AR at Google, tweeted an announcement that Google Lens would be available on iOS through the Google app:

You’ve always wanted to know what type of 🐶 that is. With Google Lens in the Google app on iOS, now you can → https://t.co/xGQysOoSug pic.twitter.com/JG4ydIo1h3

— Google (@Google) December 10, 2018

Using Google Lens became a lot easier for iOS and other non-Pixel users. When you open the Google app, the Lens icon now appears in the search bar next to the mic icon. Simply point the camera at the object you want to know more about, and the app will try to identify what it is.

How well does it work?

We tested Google Lens on items around our office to see how well it worked in each of its key abilities: text, furniture, plants and animals, and locations.

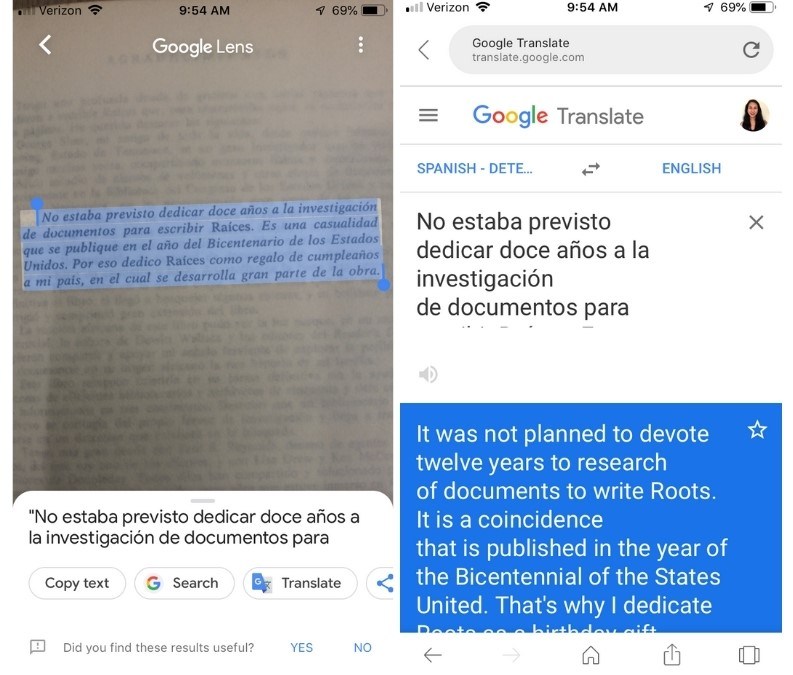

Text

Google Lens’ translate feature tested well, highlighting text from the Spanish version of Roots and pasting it into Google Translate:

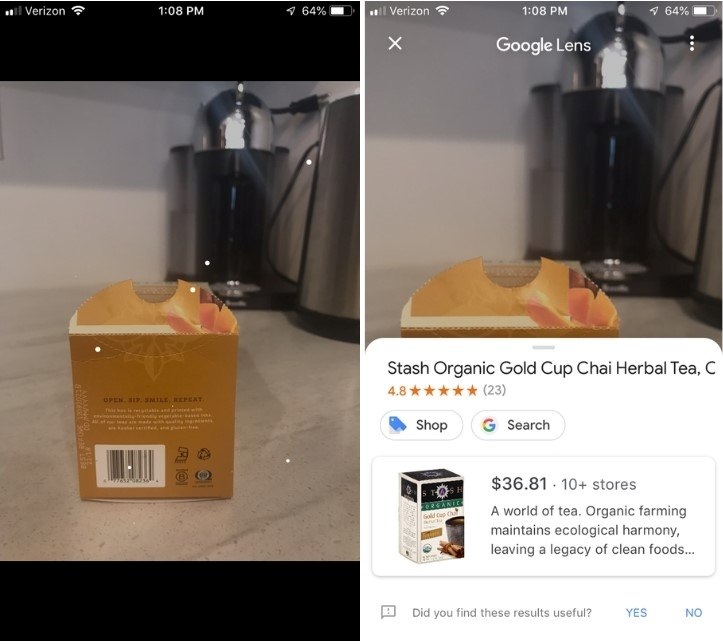

It also successfully scanned the barcode on a chai tea product and identified it.

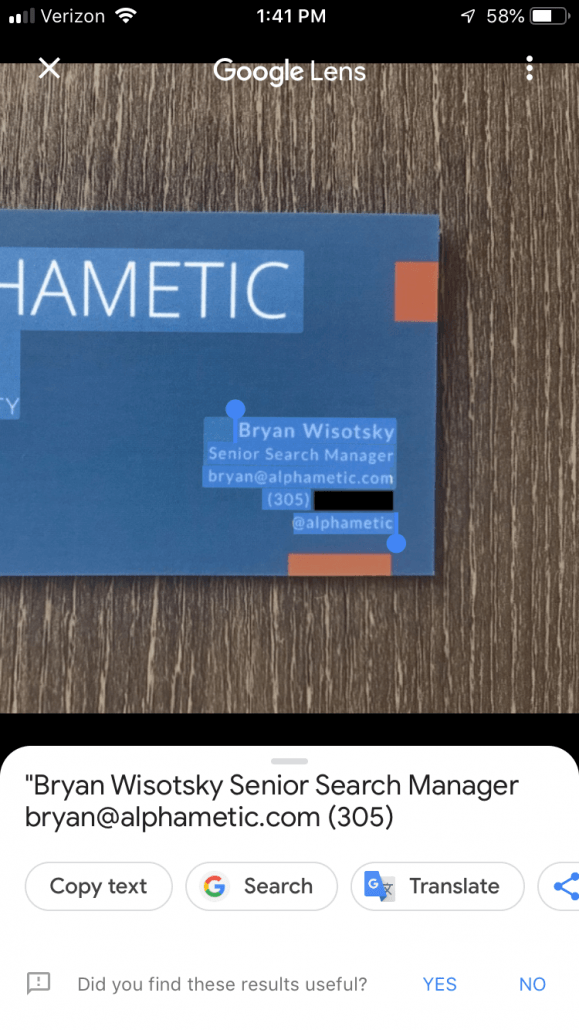

However, Lens failed our business card test. It is supposed to capture the contact information on a card and offer to save it directly to your Contacts. When we tested it on Bryan’s business card, it copied most of the text but didn’t give us the option to add the contact information.

Furniture

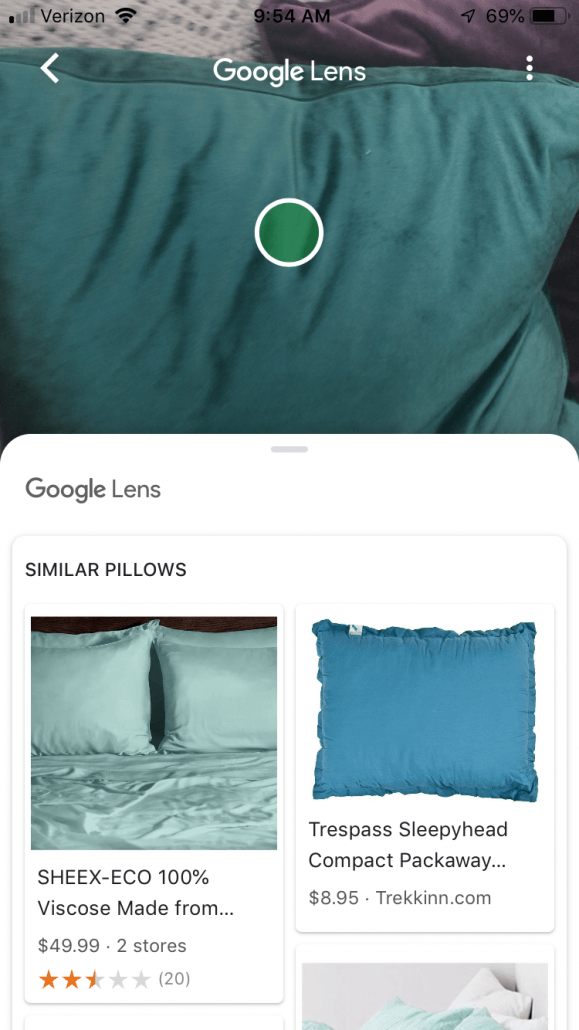

When we pointed it at a throw pillow, Lens was able to give suggestions for similar items.

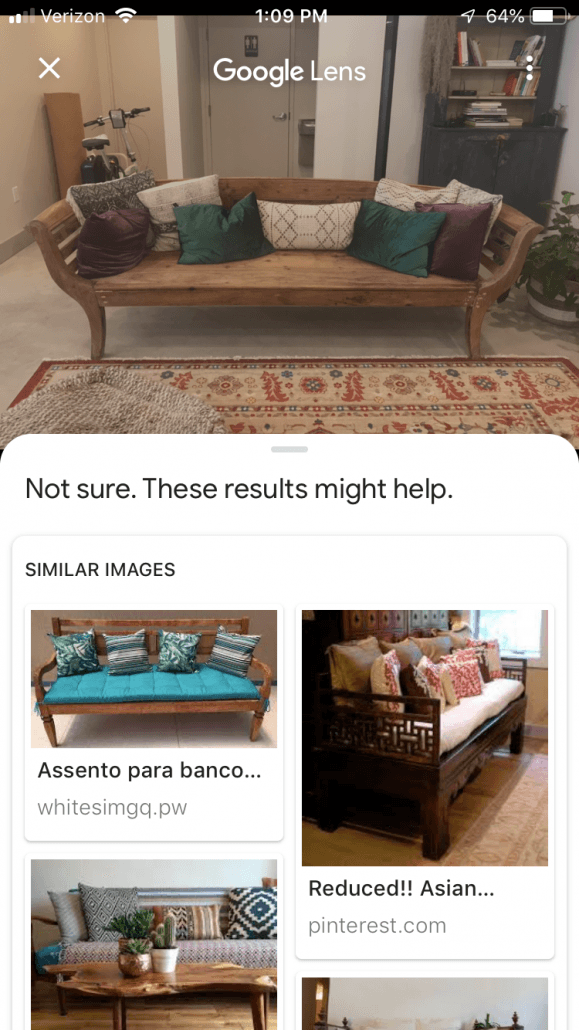

However, when we pointed it at a bench, it pulled up photos of benches with pillows on them, instead of the bench alone.

If I wanted to buy a similar bench, I would have to take the pillows off and take a picture of just the bench. This is because Lens uses machine learning to analyze the pixels it has in front of it. While its computer vision is smart, it isn’t yet smart enough to dissect the pixels into individual objects. Still pretty impressive, though.

Plants and animals

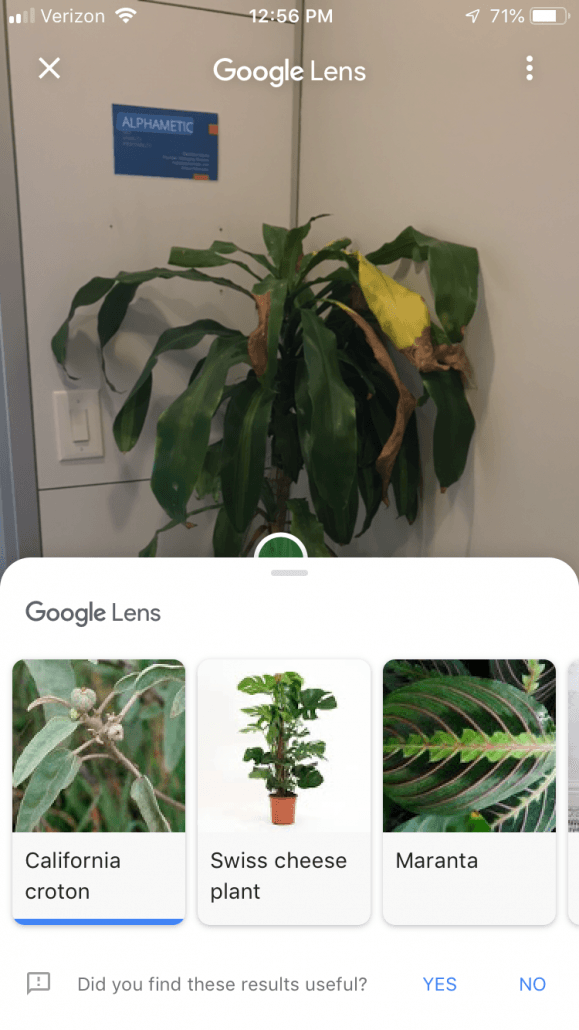

We took a picture of a tropical plant, and Google Lens gave us some results for similar plants. However, we decided to take the picture again, and it gave us completely different results.

Aparna Chennapragada explains in her blog post that this happens because “what we see in our day-to-day lives looks fairly different than the images on the web used to train computer vision models.” The photo I took on my smartphone is very different from the photo that a professional photographer took in the wild, and this confuses Google Lens. However, Chennapragada says that Google is addressing this by “training the algorithms with more pictures that look like they were taken with smartphone cameras.”

What about animals? Mashable sent a team to a “Corgi meetup” to test how well Google Lens can identify the various Corgi breeds. They snapped pictures of 20 Corgis and concluded that Google Lens identified only 11 of them correctly, which works out to 55% accuracy.

Locations

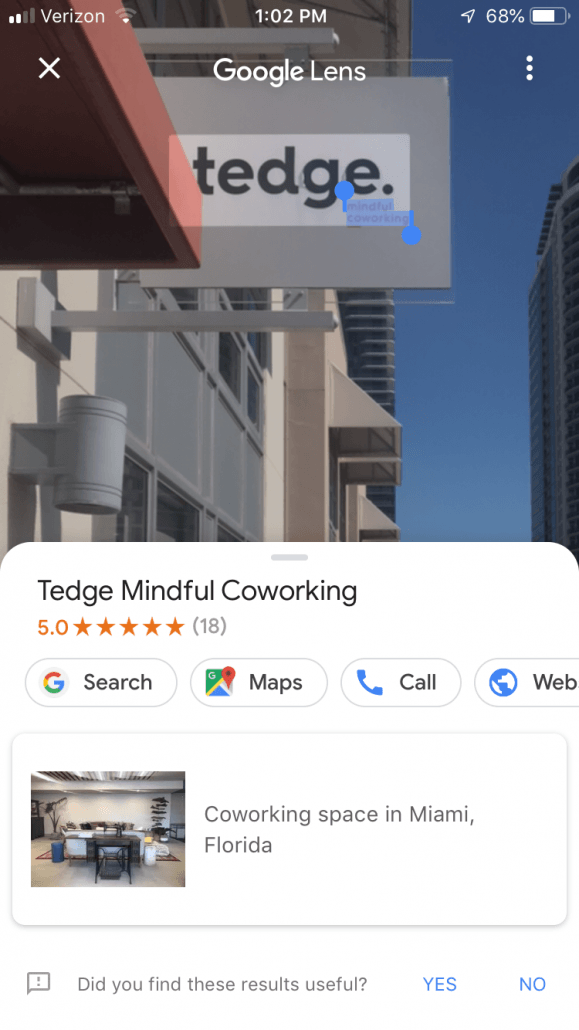

When we tested Lens on the sign of our coworking space, it was able to pull up ratings, a search option, Google Maps, and contact information.

So what can we conclude from testing Google Lens?

It’s an impressive feature that performed pretty well on most of our tests. However, if you’re looking for very specific information on what you’re observing, or need it to key in contact information from a business card, the feature still has a ways to go.

What does this mean for marketers?

With their wider accessibility and ease of use, visual search products such as Google Lens will likely find a place alongside voice search as an alternative to text-query search. Now that we understand a bit better how Google Lens works, what can we do to ensure that our marketing efforts are optimized for those using visual search?

- Brand imaging: Make sure that your logo on products, signage, and any other advertising media is consistent and clear. If you have a storefront, make sure that your signage is visible from the street, which is where customers will likely be using Google Lens.

- Metadata: To optimize image search results, make sure that your metadata is detailed and accurate. Use descriptive alt text, captions, and surrounding text content on your images.

- Image file: Google Image Search will also take image file data into account when deciding whether an image should show up in the results. Make sure to use a descriptive image filename, and pay attention to your image dimensions and size. Rand Fishkin advises in his article on how to rank on Google Image Search that Google favors images with regular dimensions (not very horizontal or very vertical) and moderate size (not too small and not too big).

- Image count: Use a lot of images on your website, especially for ecommerce, to increase your chances of being “matched” by Google correctly when someone uses Google Lens.
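
If you want to spot-check a page against the metadata and image file tips above, a short script can help. The following is a minimal Python sketch, not an official Google tool: it scans a page's HTML for `<img>` tags and flags missing alt text, generic filenames, and very wide or very tall images. The function name `audit_images`, the filename pattern, and the 2:1 aspect ratio cutoff are our own assumptions for illustration; Google does not publish exact thresholds.

```python
import os
import re
from html.parser import HTMLParser

# Hypothetical cutoff for illustration only; Google publishes no exact limit.
MAX_ASPECT_RATIO = 2.0
# Assumed pattern for camera-style or placeholder names such as "IMG_1234".
GENERIC_NAME = re.compile(
    r"^(img|image|dsc|photo|screenshot)?[-_ ]?\d*$", re.IGNORECASE
)


class ImageAudit(HTMLParser):
    """Collects (src, problems) for every <img> tag it encounters."""

    def __init__(self):
        super().__init__()
        self.report = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src") or ""
        problems = []

        # Alt text: absent or empty counts as missing.
        if not (a.get("alt") or "").strip():
            problems.append("missing alt text")

        # Filename: flag names that describe nothing about the image.
        stem = os.path.splitext(os.path.basename(src))[0]
        if GENERIC_NAME.match(stem):
            problems.append("generic filename")

        # Dimensions: only checked when width and height are declared in pixels.
        try:
            w, h = int(a["width"]), int(a["height"])
            if max(w, h) / min(w, h) > MAX_ASPECT_RATIO:
                problems.append("extreme aspect ratio")
        except (KeyError, TypeError, ValueError, ZeroDivisionError):
            pass  # dimensions missing or not plain integers; skip this check

        self.report.append((src, problems))


def audit_images(html):
    """Return a list of (src, problems) tuples for the given HTML string."""
    parser = ImageAudit()
    parser.feed(html)
    return parser.report


page = """
<img src="/img/IMG_1234.jpg" width="1200" height="300">
<img src="/img/blue-velvet-throw-pillow.jpg"
     alt="Blue velvet throw pillow on a gray bench" width="800" height="600">
"""
for src, problems in audit_images(page):
    print(src, "->", ", ".join(problems) or "ok")
```

In this sample, the first image is flagged on all three checks, while the second passes. Treat the output as a to-do list for a human editor rather than a ranking guarantee; the script cannot judge whether alt text is actually accurate or helpful.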

Whether you’re selling a product, service, or other sort of experience, it’s important to be easily found by your audience. Google Lens adds to the ways that people can interact with the world around them and presents marketers with another opportunity to get their attention. From what we can tell, Google Lens will only keep improving, and its user base will continue to grow. Stay ahead of the curve by positioning yourself to be found through visual search.

Matthew Capala is a seasoned digital marketing executive, founder/CEO of Alphametic, a Miami-based digital marketing agency, author of “The Psychology of a Website,” dynamic speaker, and entrepreneur.